11/4/2022

Ingress is great, but so far our users have to remember an IP address to get to our site. And that IP address is subject to change if we reprovision the load balancer. DNS to the rescue!

We could create a static external IP address to ensure that the IP address stays the same, then use a DNS A record to point our domain to the cluster's static IP. There are a few drawbacks I see with this approach:

These are not major issues. A static external IP costs ~$3/month on GCP and we could Terraform the DNS configurations. And ingress rules seem unlikely to change often. However, since the entire point of this is to learn about Kubernetes and what makes it unique, we'll use ExternalDNS.

ExternalDNS will inspect our ingress rules and create the required DNS records using an external DNS provider like Google Cloud DNS. No static external IP. No messing with DNS configurations.

ExternalDNS provides a tutorial for setting it up with GKE and Google Cloud Domains. This process closely follows those steps.

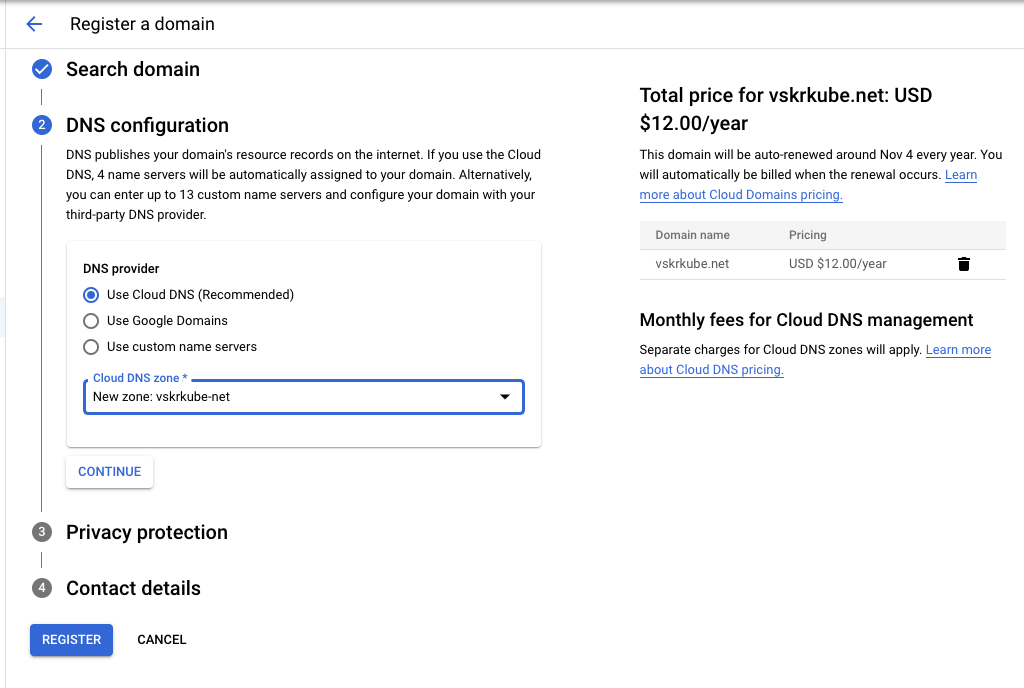

First we'll register a domain through Google Cloud Domains. Unfortunately, the official Terraform provider does not support this API, so we'll do it manually through the Cloud Console. I purchased the domain vskrkube.net.

We'll opt to use a new Cloud DNS zone as the DNS provider (the default option). That's where ExternalDNS will manage DNS records for us. Here's how that looks by default.

For ExternalDNS to work properly, we need to grant it access to Cloud DNS. Workload Identity is a feature of GKE that enables this. From the docs:

This document distinguishes between Kubernetes service accounts and Identity and Access Management (IAM) service accounts.

- Kubernetes service accounts: Kubernetes resources that provide an identity for processes running in your GKE pods.

- IAM service accounts: Google Cloud resources that allow applications to make authorized calls to Google Cloud APIs.

Applications running on GKE might need access to Google Cloud APIs such as Compute Engine API, BigQuery Storage API, or Machine Learning APIs.

Workload Identity allows a Kubernetes service account in your GKE cluster to act as an IAM service account. Pods that use the configured Kubernetes service account automatically authenticate as the IAM service account when accessing Google Cloud APIs. Using Workload Identity allows you to assign distinct, fine-grained identities and authorization for each application in your cluster.
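On the Kubernetes side, the link described above comes down to one annotation on the service account. Here is a minimal sketch, assuming the service account and its namespace are both named external-dns. GSA_NAME and PROJECT_ID are placeholders for the IAM service account name and the GCP project, not values from this setup:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns        # assumed Kubernetes service account name
  namespace: external-dns   # assumed namespace
  annotations:
    # Tells GKE which IAM service account this Kubernetes service account acts as.
    # GSA_NAME and PROJECT_ID are placeholders.
    iam.gke.io/gcp-service-account: GSA_NAME@PROJECT_ID.iam.gserviceaccount.com
```

The annotation alone is not enough. The IAM service account also has to grant the roles/iam.workloadIdentityUser role to the member serviceAccount:PROJECT_ID.svc.id.goog[NAMESPACE/KSA_NAME], which is the piece handled on the IAM side.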

We need to do four things to get this all to work:

- Grant the roles/dns.admin role to the IAM service account

Step #3 is covered by the Kubernetes manifest provided by ExternalDNS. We'll get to that shortly. For now, here's the Terraform we need for the other three steps.

Then we'll deploy the manifest from ExternalDNS with a few minor changes:

- Create a namespace named external-dns and deploy all objects within it.

- spec.nodeSelector includes an annotation to ensure that the GKE metadata server is enabled. The tutorial instructs adding the annotation imperatively.

Now let's modify our ingress object to include the host test.vskrkube.net.
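A minimal sketch of what that change might look like, assuming the networking.k8s.io/v1 Ingress API. The ingress name ingress1 comes from this walkthrough. The backend service name and port are hypothetical stand-ins, not the actual manifest used here:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress1
spec:
  rules:
    # ExternalDNS reads this host and creates the matching DNS records.
    - host: test.vskrkube.net
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service   # hypothetical backend service
                port:
                  number: 80       # hypothetical port
```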

Let's have a look at the logs for the ExternalDNS deployment after applying that change.

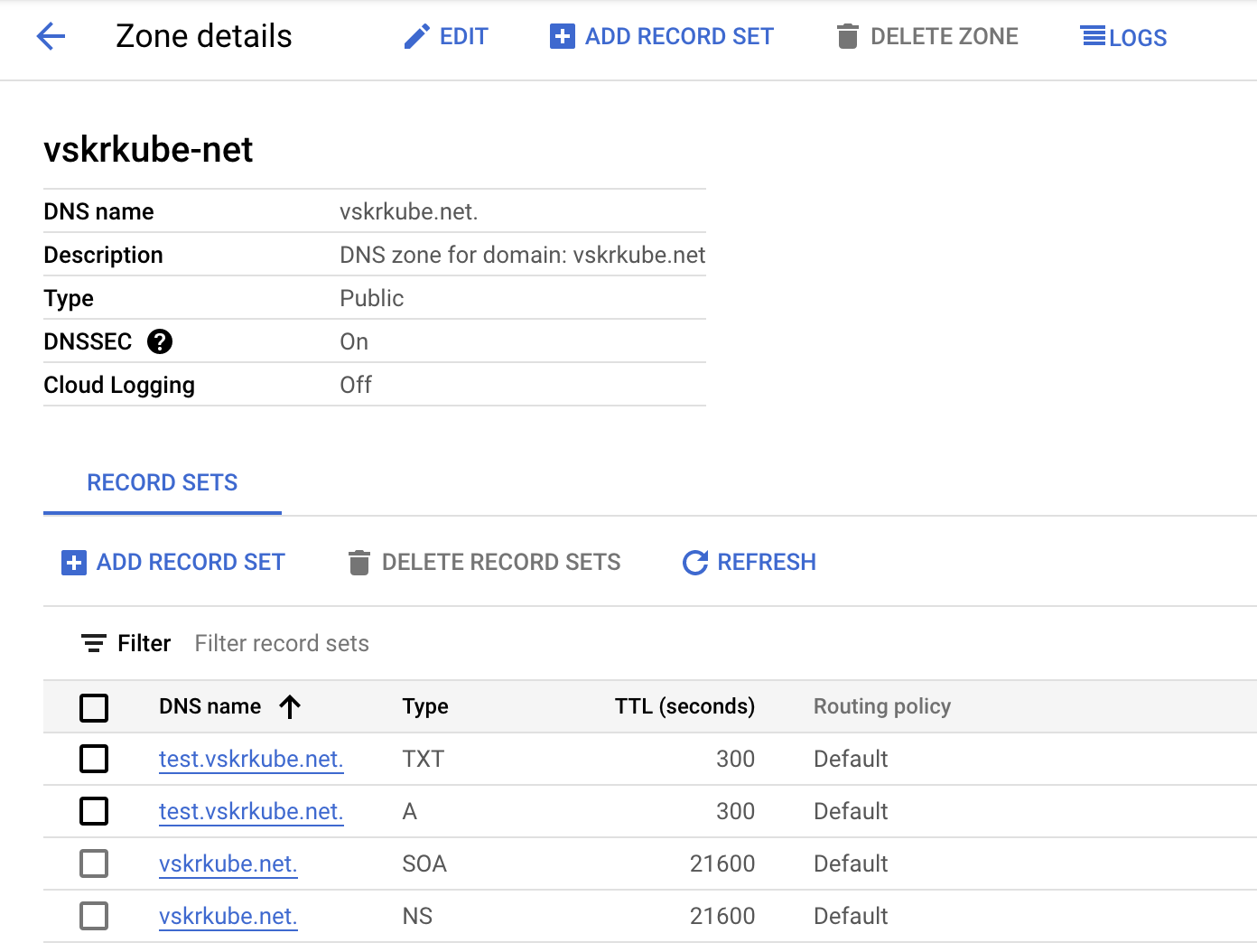

ExternalDNS reports that it has created DNS records for us. Let's have a look at the vskrkube.net Cloud DNS zone again.

It shows the records as expected. Let's make sure those DNS records are set up correctly.

Sure enough, the A record created by ExternalDNS points to the IP address of our GCP load balancer configured by the ingress1 object.

Now we can hit our application using the test.vskrkube.net host name instead of using the IP address.

What's cool about this approach is that we can change the host name in one step by modifying the ingress rules. That means we can change it to use test2.vskrkube.net and requests to that host name will begin to work automatically in a few minutes. It also means we can add new rules with new host names under the vskrkube.net domain and they will also work automatically.
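To make that concrete, here is a hedged sketch of an ingress with the renamed host plus one additional rule. Only test2.vskrkube.net comes from the text above. The host app2.vskrkube.net and both backend services are hypothetical:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress1
spec:
  rules:
    # Renamed from test.vskrkube.net.
    - host: test2.vskrkube.net
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service      # hypothetical backend service
                port:
                  number: 80
    # A second, hypothetical host under the same domain.
    - host: app2.vskrkube.net
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: other-service   # hypothetical backend service
                port:
                  number: 80
```

After applying a manifest like this, ExternalDNS should pick up both hosts on its next sync, with no separate DNS change needed.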

While this is interesting, I'm not sure it's worth the complexity of configuring and maintaining ExternalDNS. It's also not very portable. Most of the steps taken here are specific to GKE, so migrating this work to another platform seems painful. Perhaps more portability would be possible if Kubernetes had a more native way to configure external DNS providers.

If I were just trying to get things done, I would manually manage DNS and use a static IP address. At least I had fun.